A comprehensive guide to sampling theory and methods in data science, covering simple random sampling, stratified sampling, cluster sampling, sampling error, and uncertainty quantification. Learn how to design effective sampling strategies and interpret results from sample data.

This article is part of the free-to-read Machine Learning from Scratch

Choose your expertise level to adjust how many terms are explained. Beginners see more tooltips, experts see fewer to maintain reading flow. Hover over underlined terms for instant definitions.

Sampling: From Populations to Observations

Sampling is the process of selecting a subset of observations from a larger population to make inferences about the whole. It forms the foundation of statistical inference, enabling data scientists to draw meaningful conclusions from manageable amounts of data without requiring complete enumeration of entire populations.

Introduction

In most real-world scenarios, examining every member of a population is impractical, prohibitively expensive, or simply impossible. Consider attempting to measure the average height of all adults in a country, test the quality of every product manufactured in a factory, or survey every customer who has ever used a service. Sampling provides a practical alternative by allowing us to study a carefully selected subset and use statistical methods to generalize our findings to the broader population.

The quality of insights derived from data analysis depends on how well the sample represents the population of interest. A poorly designed sampling strategy can introduce biases that invalidate conclusions, regardless of how sophisticated the subsequent analysis may be. This chapter explores the fundamental concepts of sampling, including the distinction between populations and samples, various sampling methodologies, and the inherent uncertainty that arises when working with sample data rather than complete populations.

Understanding sampling theory equips anyone who works with data to design better studies, interpret results more accurately, and recognize when conclusions drawn from data may be questionable due to sampling limitations. These skills prove essential whether collecting new data or working with existing datasets where the sampling mechanism affects what conclusions can be drawn.

Population versus Sample

At the heart of sampling theory lies the distinction between a population and a sample. The population represents the complete set of all individuals, items, or observations about which we want to draw conclusions. This might be all registered voters in a country, every transaction in a database, or all possible measurements from a manufacturing process. The population defines the scope of our inference: the group to which our conclusions should apply.

In contrast, a sample consists of the subset of observations we actually collect and analyze. When we survey 1,000 voters from a population of millions, conduct quality tests on 100 products from a production run of 10,000, or analyze data from 500 customers out of a customer base of 100,000, we are working with samples. The sample serves as a window into the population, providing the empirical evidence from which we make inferences about population characteristics.

Population characteristics are described by parameters, which are fixed but typically unknown values such as the true population mean () or population proportion (). Sample characteristics are described by statistics, which are calculated values such as the sample mean () or sample proportion () that we compute from observed data. A fundamental goal of statistical inference is to use sample statistics to estimate population parameters and quantify our uncertainty about those estimates.

The relationship between samples and populations introduces a key challenge: samples rarely perfectly mirror their parent populations. Random variation means that different samples from the same population will yield different statistics, a phenomenon known as sampling variability. Understanding and accounting for this variability forms the basis of inferential statistics and confidence interval construction.

Sampling Methods

The method used to select a sample profoundly affects its ability to represent the population accurately. Various sampling strategies exist, each with distinct characteristics, advantages, and appropriate use cases.

Simple Random Sampling

Simple random sampling gives every member of the population an equal probability of selection, making it the most straightforward probability sampling method. In practice, this might involve assigning each population member a unique number and using a random number generator to select the sample. For a digital dataset, this could mean randomly shuffling records and taking the first observations.

Simple random sampling has desirable theoretical properties by eliminating systematic selection bias and providing a basis for calculating standard errors and confidence intervals using classical formulas. However, it requires a complete list (sampling frame) of all population members, which may not be available or practical to construct. Additionally, simple random sampling may by chance produce samples that underrepresent or overrepresent important subgroups in the population.

Stratified Sampling

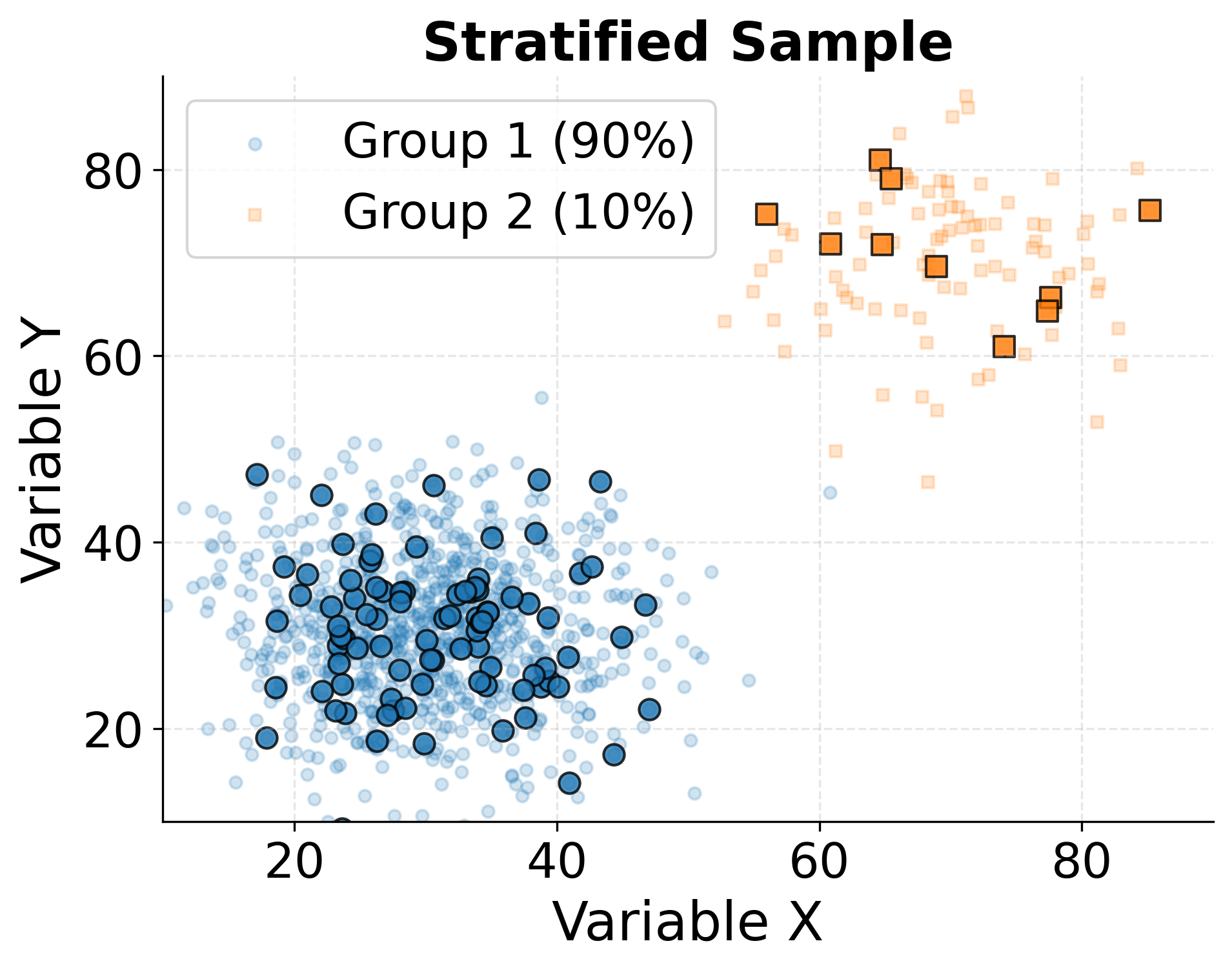

Stratified sampling addresses some limitations of simple random sampling by dividing the population into homogeneous subgroups (strata) based on characteristics like age, region, or income level, then drawing random samples from each stratum. The number of observations selected from each stratum can be proportional to the stratum's size in the population (proportionate allocation) or deliberately over- or under-sample certain strata (disproportionate allocation).

This approach offers several advantages. By ensuring representation from all important subgroups, stratified sampling can reduce sampling variability and produce more precise estimates for the same sample size. It also enables analysts to make reliable inferences about individual strata while still estimating overall population parameters. The cost is increased complexity in sample design and the need to define appropriate strata before sampling begins.

Cluster Sampling

Cluster sampling divides the population into groups (clusters) and randomly selects some clusters, then samples all or some members within chosen clusters. For example, a nationwide survey might randomly select cities (clusters), then survey households within those cities. This differs from stratified sampling in that we want clusters to be internally heterogeneous (mini-representations of the population) rather than homogeneous.

The primary motivation for cluster sampling is practical convenience and cost reduction. When populations are geographically dispersed, traveling to randomly selected individuals across the entire area becomes expensive. Concentrating data collection within selected clusters substantially reduces logistical complexity. However, observations within clusters often share characteristics, leading to higher sampling variability than simple random sampling of the same size. This design effect must be accounted for in analysis.

Convenience Sampling

Convenience sampling selects observations based on ease of access rather than probability-based selection mechanisms. Examples include surveying people walking by a particular location, analyzing data from volunteers who responded to an online advertisement, or using whatever data happens to be readily available.

While convenience sampling can provide quick, inexpensive preliminary insights, it frequently introduces substantial biases that limit generalizability. People who volunteer for surveys may differ systematically from those who do not. Locations convenient for data collection may attract non-representative populations. Convenience samples should be used cautiously, with careful consideration of how selection mechanisms might bias results, and ideally validated against probability-based samples when possible.

Sampling Error and Uncertainty

Even when using rigorous probability sampling methods, sample statistics will differ from true population parameters due to random variation in which observations happen to be selected. This difference between a sample statistic and the true population parameter is called sampling error.

Sampling error is not a mistake or fault in methodology but rather an inherent consequence of observing only part of the population. If we drew many different samples from the same population using the same sampling method, each would yield a slightly different sample mean, proportion, or other statistic. The sampling distribution describes the distribution of a statistic across all possible samples of a given size.

Understanding sampling distributions enables us to quantify uncertainty through standard errors and confidence intervals. The standard error measures the typical magnitude of sampling error: how much we expect sample statistics to vary around the true population parameter. Larger samples generally produce smaller standard errors, reflecting the intuition that more data provides more precise estimates.

The margin of error in opinion polls and surveys directly reflects sampling uncertainty, typically calculated as approximately two standard errors. When a poll reports 52% support with a margin of error of ±3%, this indicates that if we repeated the sampling process many times, roughly 95% of sample proportions would fall between 49% and 55%, assuming the same sampling methodology.

Distinguishing sampling error from other sources of error in data analysis is important. Non-sampling errors include measurement errors, nonresponse bias (when certain groups systematically fail to respond), coverage errors (when the sampling frame excludes parts of the population), and processing errors. Unlike sampling error, which decreases predictably with larger samples, non-sampling errors may not improve with increased sample size and sometimes worsen as data collection becomes more complex.

Visual Examples

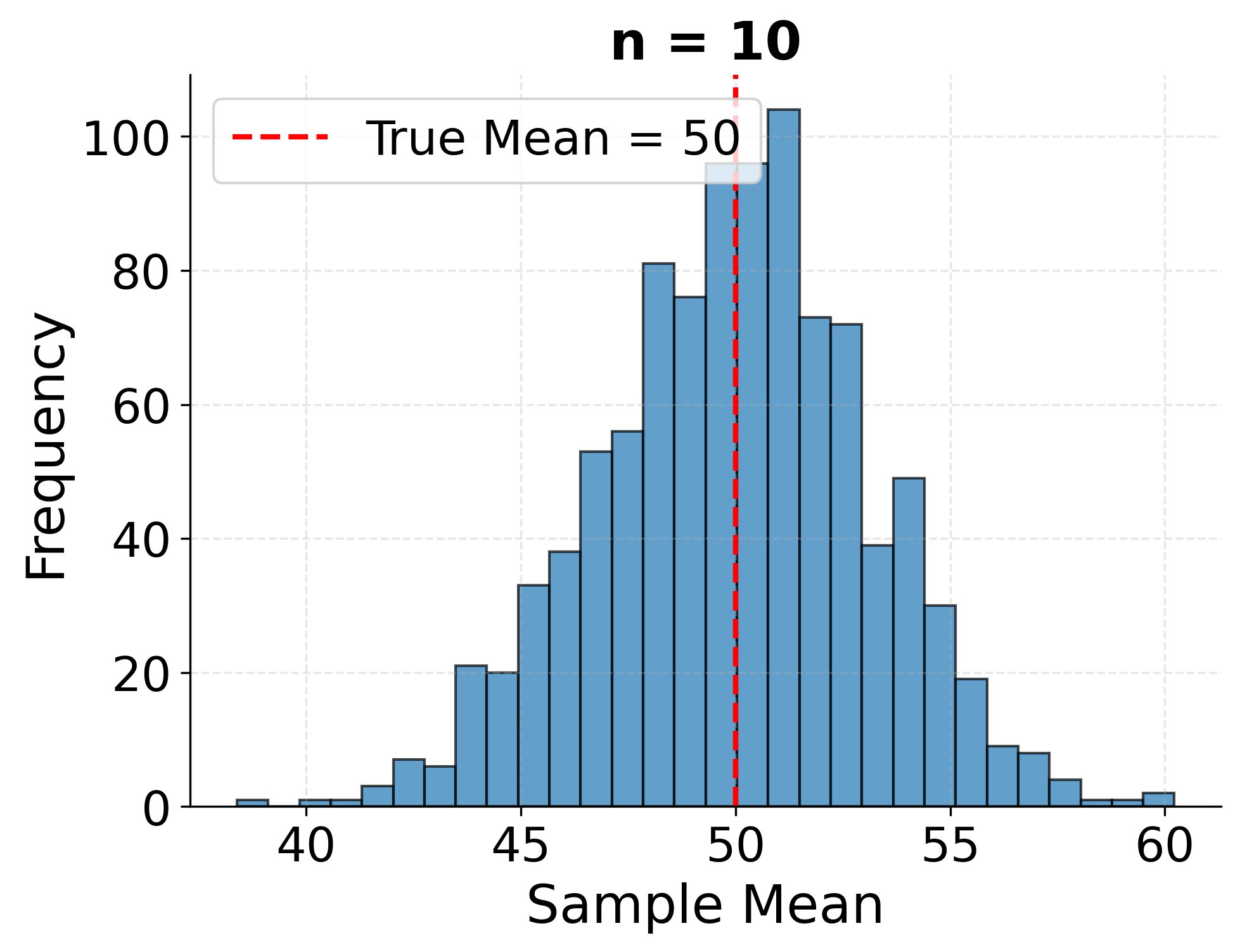

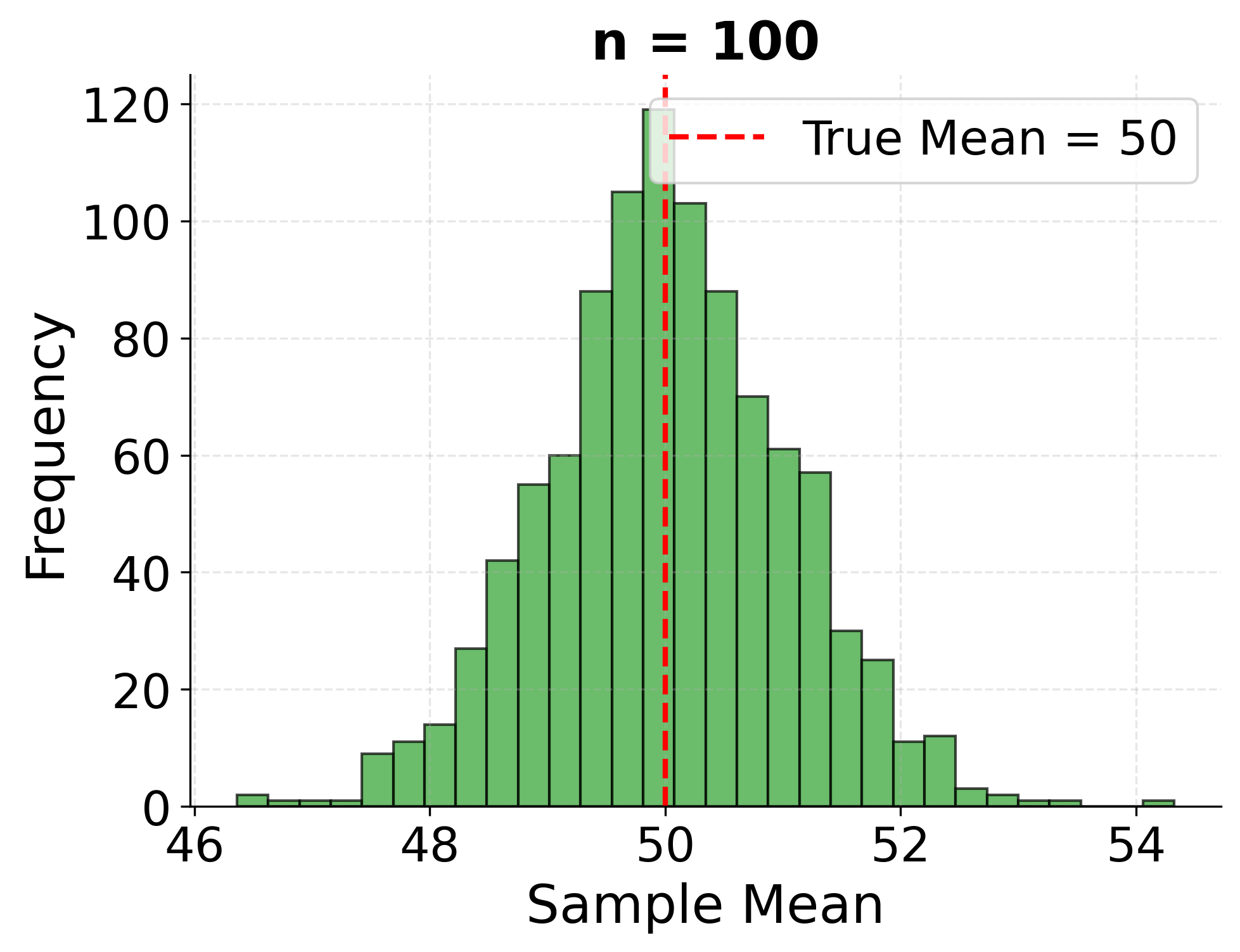

To build intuition about sampling variability and how different sample sizes affect our estimates, let's simulate drawing samples from a known population and observe how sample means behave.

Example: Sampling Distribution of the Mean

This example demonstrates the sampling distribution concept by repeatedly drawing samples of different sizes from a population and showing how sample means vary around the true population mean. Larger samples produce estimates that cluster more tightly around the true value.

Example: Comparing Sampling Methods

This visualization demonstrates how different sampling strategies can produce samples with varying degrees of representativeness. We'll compare simple random sampling with stratified sampling in a population containing distinct subgroups.

Practical Applications

Sampling methodologies find application across virtually every domain of data science and statistical analysis. Survey research organizations use stratified sampling to ensure accurate representation of demographic subgroups when polling public opinion, enabling reliable insights from samples of 1,000-2,000 respondents drawn from populations of millions. Market researchers employ cluster sampling when conducting in-person interviews or taste tests, selecting representative cities or neighborhoods rather than attempting to reach randomly selected individuals scattered across vast geographic areas.

In quality control and manufacturing, acceptance sampling determines whether to accept or reject production batches by inspecting random samples of items rather than testing every unit. This approach balances quality assurance needs with testing costs and destructive testing limitations. Clinical trials use careful sampling and randomization to ensure study participants represent broader patient populations, enabling generalizable conclusions about treatment effectiveness from manageable study sizes.

Machine learning practitioners encounter sampling when creating training, validation, and test sets from larger datasets. Stratified sampling ensures rare classes are adequately represented in each partition, while random sampling helps prevent data leakage and overfitting. When working with massive datasets, data scientists often perform exploratory analysis on carefully drawn samples before committing computational resources to full-dataset analyses.

A/B testing in technology companies relies on random sampling to assign users to experimental conditions, ensuring that observed differences in metrics can be attributed to the intervention rather than pre-existing differences between groups. The sampling mechanism directly affects the validity of causal inferences drawn from these experiments.

Limitations and Considerations

While probability-based sampling provides a rigorous framework for statistical inference, practical implementations face numerous challenges that can compromise the idealized theory. Real-world sampling frames rarely perfectly enumerate the target population. For example, phone survey sampling frames leave out people without phones, and online panels may underrepresent individuals with limited or no access to the internet. Administrative databases can also contain outdated or incomplete information. These coverage gaps mean that even perfectly random selection from a sampling frame may yield biased estimates for the true population of interest.

Nonresponse poses another challenge. When selected individuals decline to participate or cannot be reached, and if these nonrespondents differ systematically from respondents, bias enters the results. Low response rates affect many modern surveys, and weighting adjustments can only partially compensate when the characteristics driving nonresponse are not fully understood or measured.

The law of diminishing returns applies to sample size: doubling sample size reduces standard errors by only about 29% (since standard error decreases with the square root of sample size), while potentially doubling costs. Very large samples reduce sampling error to negligible levels, but this offers little value if non-sampling errors like measurement bias or systematic nonresponse bias dominate. Resources might be better spent improving data quality than simply collecting more observations.

Temporal considerations matter when populations evolve over time. A sample drawn from a customer database represents the population at that moment, but if customer characteristics change, inferences drawn from that sample may not apply to future periods. Seasonality, trends, and sudden shifts can all affect the representativeness of samples drawn at specific times.

Sampling theory provides tools for statistical inference but cannot substitute for clear thinking about causal relationships and study design. Representative samples enable us to estimate population characteristics accurately, but they do not by themselves establish causation or ensure that observed associations reflect meaningful relationships rather than confounding factors.

Summary

Sampling forms the bridge between the limited data we can collect and the broader populations about which we seek to draw conclusions. The fundamental distinction between populations and samples underlies all of statistical inference, with population parameters representing unknown truths and sample statistics serving as our estimates based on observed data. Different sampling methods (simple random sampling, stratified sampling, cluster sampling, and convenience sampling) offer different trade-offs between simplicity, cost, and statistical properties.

Sampling error represents the inherent uncertainty that arises from observing only part of a population rather than the whole. Understanding sampling distributions and standard errors enables practitioners to quantify this uncertainty through confidence intervals and hypothesis tests. However, sampling error is only one component of total survey error, and reducing it to negligible levels provides little value if non-sampling errors like measurement bias and nonresponse bias remain substantial.

Effective sampling requires careful attention to defining the target population, constructing comprehensive sampling frames, selecting appropriate sampling methods for the research context, and accounting for practical challenges like nonresponse and coverage gaps. When done well, sampling enables reliable, cost-effective inference from manageable amounts of data, a capability fundamental to modern data science and statistical practice.

Quiz

Ready to test your understanding of sampling methods and concepts? Take this quiz to reinforce what you've learned about populations, samples, and sampling strategies.

Comments